Andrea Marcheggiani – a.marcheggiani@pgr.reading.ac.uk

Diabatic processes are typically considered a source of energy for weather systems and a primary contributing factor to the maintenance of mid-latitude storm tracks (see Hoskins and Valdes 1990 for some classical reading, and more recent reviews, e.g. Chang et al. 2002). However, surface heat exchanges do not necessarily act as a fuel for the evolution of weather systems: the effects of surface heat fluxes and their coupling with lower-tropospheric flow can be detrimental to the potential energy available for systems to grow. Indeed, the magnitude and sign of their effects depend on the time (e.g., synoptic, seasonal) and length (e.g., global, zonal, local) scales at which these effects unfold.

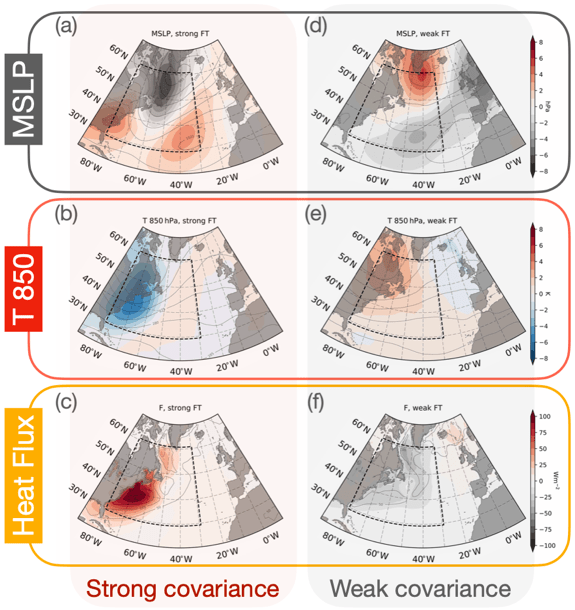

Figure 1: Composites for strong (a-c) and weak (d-f) values of the covariance between heat flux and temperature time anomalies.

Heat fluxes arise in response to thermal imbalances, which they attempt to neutralise. In the atmosphere, the primary thermal imbalances are the meridional temperature gradient, caused by the differential radiative heating from the Sun between the equator and the poles, and the temperature contrasts at the air–sea interface, which essentially derive from the different heat capacities of the oceans and the atmosphere.

In the context of the energetic scheme of the atmosphere, which was first formulated by Lorenz (1955) and is commonly known as the Lorenz energy cycle, the meridional transport of heat (or dry static energy) is associated with the conversion of zonal available potential energy to eddy available potential energy, while diabatic processes at the surface coincide with the generation of eddy available potential energy.

The sign of the contribution from surface heat exchanges to the evolution of weather systems is not clear-cut, as it depends on the framework used to evaluate their effects. Globally, surface heat exchanges have been estimated to have a positive effect on the potential energy budget (Peixoto and Oort, 1992). Locally the picture is less clear, as heating where it is cold and cooling where it is warm would lead to a reduction in temperature variance, which is essentially available potential energy.
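The link between "heating where it is cold" and a loss of temperature variance can be made explicit with a schematic variance budget. This is only an illustrative sketch: the symbols $T^*$ (departure of temperature from a domain average $\langle\cdot\rangle$), $Q^*$ (the corresponding departure of the diabatic heating rate) and $R^*$ (all remaining tendencies) are notation assumed here, not taken from the references.

$$
\frac{\partial T^*}{\partial t} = Q^* + R^*
\quad\Longrightarrow\quad
\frac{\mathrm{d}}{\mathrm{d}t}\,\frac{1}{2}\langle T^{*2}\rangle
= \langle T^* Q^*\rangle + \langle T^* R^*\rangle .
$$

If heating occurs where the air is colder than average and cooling where it is warmer, then $\langle T^* Q^*\rangle < 0$ and the diabatic term on its own reduces the temperature variance.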

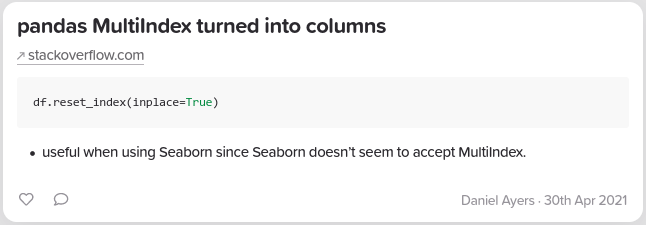

The first part of my PhD focussed on assessing the role of local air–sea heat exchanges in the evolution of synoptic systems. To that end, we built a hybrid framework where the spatial covariance between time anomalies of sensible heat flux F and lower-tropospheric air temperature T is taken as a measure of the intensity of the air–sea thermal coupling. The time anomalies, denoted by a prime, are defined as departures from a 10-day running mean so that we can concentrate on synoptic variability (Athanasiadis and Ambaum, 2009). The spatial domain where we compute the spatial covariance extends from 30°N to 60°N and from 30°W to 79.5°W, which corresponds with the Gulf Stream extension region. To focus on air–sea interaction, we excluded grid points covered by land or ice.

This leaves us with a time series of the F’–T’ spatial covariance, which we also refer to as the FT index.
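The construction of the index described above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions rather than the code used in the study: the function names (`time_anomaly`, `ft_index`), the cos(latitude) area weighting, the handling of the series edges and the synthetic input data are all hypothetical choices, and no sign convention for the flux is implied.

```python
import numpy as np


def time_anomaly(field, window):
    """Departure from a centred running mean along axis 0 (time).

    Only time steps with a full window are kept, so the output is
    shorter than the input by window - 1 steps. window must be odd.
    """
    if window % 2 != 1:
        raise ValueError("window must be an odd number of time steps")
    half = window // 2
    csum = np.cumsum(field, axis=0)
    csum = np.concatenate([np.zeros_like(field[:1]), csum], axis=0)
    mean = (csum[window:] - csum[:-window]) / window
    return field[half:field.shape[0] - half] - mean


def ft_index(flux, temp, lat, sea_mask, window):
    """Spatial covariance of flux and temperature time anomalies.

    flux, temp : arrays of shape (time, lat, lon)
    lat        : latitudes in degrees, shape (lat,)
    sea_mask   : boolean (lat, lon), True over ice-free ocean
    window     : running-mean length in time steps (e.g. 10 days)
    """
    f_anom = time_anomaly(flux, window)
    t_anom = time_anomaly(temp, window)
    # Assumed weighting: cos(latitude), set to zero over land or ice.
    w = np.cos(np.deg2rad(lat))[:, None] * sea_mask
    w = w / w.sum()
    # Remove the weighted spatial mean before taking the covariance.
    f_dev = f_anom - (w * f_anom).sum(axis=(1, 2))[:, None, None]
    t_dev = t_anom - (w * t_anom).sum(axis=(1, 2))[:, None, None]
    return (w * f_dev * t_dev).sum(axis=(1, 2))


# Synthetic example: a flux that co-varies with temperature by design.
rng = np.random.default_rng(0)
nt, nlat, nlon = 200, 5, 8
lat = np.linspace(30.0, 60.0, nlat)
temp = rng.standard_normal((nt, nlat, nlon))
flux = 2.0 * temp + 0.1 * rng.standard_normal((nt, nlat, nlon))
sea_mask = np.ones((nlat, nlon), dtype=bool)
sea_mask[0, :2] = False  # pretend two grid points are land
index = ft_index(flux, temp, lat, sea_mask, window=41)
print(index.shape)  # (160,)
```

The running mean is computed with a cumulative sum so that the sketch needs nothing beyond NumPy. With real data, `window` would be chosen to span 10 days at the temporal resolution of the dataset.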

The FT index is found to be always positive and characterised by frequent bursts of intense activity (or peaks). Composite analysis, shown in Figure 1 for mean sea level pressure (a,d), temperature at 850 hPa (b,e) and surface sensible heat flux (c,f), indicates that peaks of the FT index (panels a-c) correspond with intense weather activity in the spatial domain considered (dashed box in Figure 1), while a more settled weather pattern is typical when the FT index is weak (panels d-f).

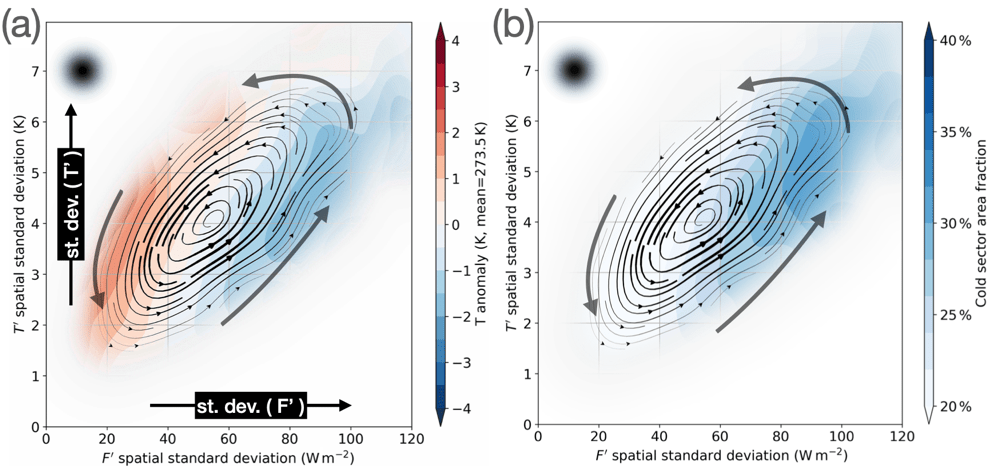

Figure 3: Phase portraits for spatial-mean T (a) and cold sector area fraction (b). Shading in (a) represents the difference between phase tendency and the mean value of T, as reported next to the colour bar. Arrows highlight the direction of the circulation, kernel-averaged using the Gaussian kernel shown in the top-left corner of each panel.

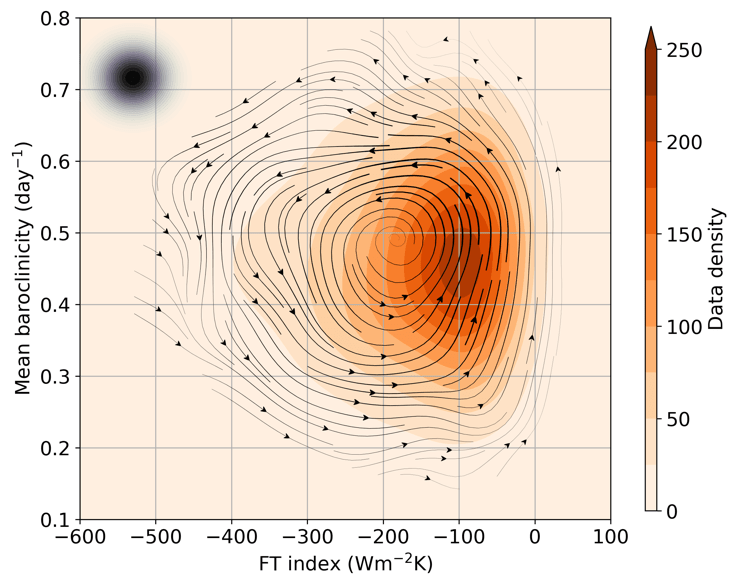

We examine the dynamical relationship between the FT index and the area-mean baroclinicity, which is a measure of available potential energy in the spatial domain. To do that, we construct a phase space of FT index and baroclinicity and study the average circulation traced by the time series of the two dynamical variables. The resulting phase portrait is shown in Figure 2. For technical details on phase space analysis refer to Novak et al. (2017), while for more examples of its use see Marcheggiani and Ambaum (2020) or Yano et al. (2020). We observe that, on average, baroclinicity is strongly depleted during events of strong F’–T’ covariance and recovers primarily when covariance is weak. This suggests that events of strong thermal coupling between the surface and the lower troposphere are on average associated with a reduction in baroclinicity, thus acting as a sink of energy in the evolution of storms and, more generally, storm tracks.
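The idea of an average circulation in phase space can be illustrated with a short NumPy sketch: the tendencies of two time series are averaged at each node of a phase-space grid, weighting every sample by a Gaussian kernel centred on that node. This is a simplified illustration and not necessarily the procedure of Novak et al. (2017); the function name `phase_portrait`, the kernel widths and the synthetic trajectory are assumptions made for the example.

```python
import numpy as np


def phase_portrait(x, y, dt, grid_x, grid_y, sx, sy):
    """Kernel-averaged tendencies of (x, y) on a phase-space grid.

    x, y           : time series of the two variables, shape (time,)
    dt             : time step between samples
    grid_x, grid_y : 1-D arrays of grid-node coordinates
    sx, sy         : Gaussian kernel widths along each axis (assumed)
    Returns (u, v, weight), each of shape (len(grid_x), len(grid_y)).
    """
    # Finite-difference estimates of dx/dt and dy/dt.
    dxdt = np.gradient(x, dt)
    dydt = np.gradient(y, dt)
    gx, gy = np.meshgrid(grid_x, grid_y, indexing="ij")
    # Gaussian weight of every sample at every node: (nx, ny, time).
    w = np.exp(-0.5 * (((gx[..., None] - x) / sx) ** 2
                       + ((gy[..., None] - y) / sy) ** 2))
    weight = w.sum(axis=-1)
    # Nodes with no data nearby are returned as NaN.
    norm = np.where(weight > 0, weight, np.nan)
    u = (w * dxdt).sum(axis=-1) / norm
    v = (w * dydt).sum(axis=-1) / norm
    return u, v, weight


# Synthetic example: an anticlockwise circular trajectory.
t = np.linspace(0.0, 20.0 * np.pi, 4001)
x, y = np.cos(t), np.sin(t)
nodes = np.array([-1.0, 0.0, 1.0])
u, v, weight = phase_portrait(x, y, t[1] - t[0], nodes, nodes, 0.2, 0.2)
# At the node (1, 0) the mean tendency points towards increasing y.
print(u[2, 1], v[2, 1])
```

The returned `weight` field indicates how much data supports each node, which is useful for masking poorly sampled regions of the phase space before plotting the arrows.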

Upon investigating the mechanisms that drive a strong F’–T’ spatial covariance, we find that increases in the variances and in the correlation are equally important. More recent results indicate that this may be a more general feature of heat fluxes in the atmosphere (which is the focus of the second part of my PhD).
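The split between variances and correlation follows from the definition of the correlation coefficient. As a short reminder, with $r$ denoting the spatial correlation between F’ and T’ and $\sigma_F$, $\sigma_T$ their spatial standard deviations (symbols introduced here for illustration):

$$
\mathrm{cov}(F', T') = r\,\sigma_F\,\sigma_T
\quad\Longrightarrow\quad
\frac{\mathrm{d}}{\mathrm{d}t}\ln \mathrm{cov}(F', T')
= \frac{\mathrm{d}\ln r}{\mathrm{d}t}
+ \frac{\mathrm{d}\ln \sigma_F}{\mathrm{d}t}
+ \frac{\mathrm{d}\ln \sigma_T}{\mathrm{d}t}.
$$

The logarithmic form is valid when the covariance is positive, as is the case for the FT index, and it makes the relative contributions of correlation and standard deviations to a growth in covariance directly comparable.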

In the case of surface heat fluxes, cold sector dynamics play a fundamental role in driving the increase of correlation. When cold air is advected over the ocean surface, flux variance amplifies in response to the stark temperature contrasts at the air–sea interface, as the ocean surface temperature field features a higher degree of spatial variability linked to the presence of both the Gulf Stream on the large scale and oceanic eddies on the mesoscale (up to 100 km).

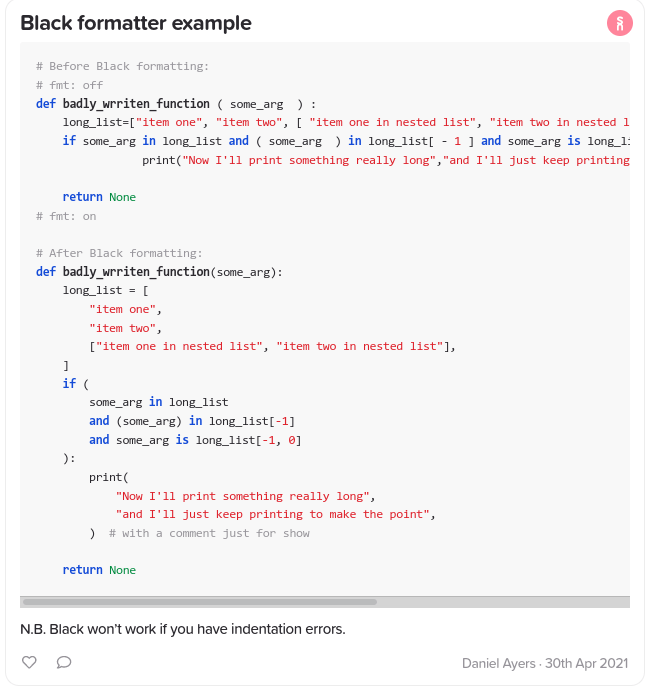

The growing relative importance of the cold sector in the intensification phase of the F’–T’ spatial covariance can also be seen in the phase portraits for air temperature and cold sector area fraction, which are shown in Figure 3. These phase portraits tell us how these fields vary at different points in the phase space of surface heat flux and air temperature spatial standard deviations (which correspond to the horizontal and vertical axes, respectively). Lower temperatures and a larger cold sector area fraction characterise the increase in covariance, while the opposite trend is observed in the decaying stage.

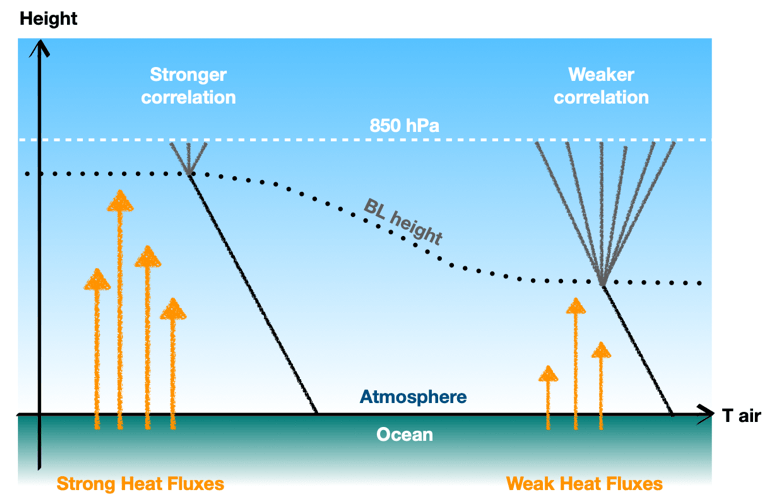

Surface heat fluxes eventually trigger an increase in temperature variance, which within the atmospheric boundary layer follows an almost adiabatic vertical profile characteristic of the mixed layer (Stull, 1988).

Stronger surface heat fluxes are associated with a deeper boundary layer reaching higher levels into the troposphere. This could explain the observed increase in correlation, as the lower-tropospheric air temperatures become more strongly coupled with the surface, while a lower correlation with the surface ensues when the boundary layer is shallow and surface heat fluxes are weak. Figure 4 shows a simple diagram summarising the mechanisms described above.

In conclusion, we showed that, locally, surface heat fluxes can have a damping effect on the evolution of mid-latitude weather systems, as the covariation of surface heat flux and air temperature in the lower troposphere corresponds with a decrease in the available potential energy.

Results indicate that most of this thermodynamically active heat exchange is realised within the cold sector of weather systems, specifically as the atmospheric boundary layer deepens and exerts a deeper influence upon the tropospheric circulation.

References

- Athanasiadis, P. J. and Ambaum, M. H. P.: Linear Contributions of Different Time Scales to Teleconnectivity, J. Climate, 22, 3720–3728, 2009.

- Chang, E. K., Lee, S., and Swanson, K. L.: Storm track dynamics, J. Climate, 15, 2163–2183, 2002.

- Hoskins, B. J. and Valdes, P. J.: On the existence of storm-tracks, J. Atmos. Sci., 47, 1854–1864, 1990.

- Lorenz, E. N.: Available potential energy and the maintenance of the general circulation, Tellus, 7, 157–167, 1955.

- Marcheggiani, A. and Ambaum, M. H. P.: The role of heat-flux–temperature covariance in the evolution of weather systems, Weather and Climate Dynamics, 1, 701–713, 2020.

- Novak, L., Ambaum, M. H. P., and Tailleux, R.: Marginal stability and predator–prey behaviour within storm tracks, Q. J. Roy. Meteorol. Soc., 143, 1421–1433, 2017.

- Peixoto, J. P. and Oort, A. H.: Physics of climate, American Institute of Physics, New York, NY, USA, 1992.

- Stull, R. B.: Mean boundary layer characteristics, In: An Introduction to Boundary Layer Meteorology, Springer, Dordrecht, the Netherlands, 1–27, 1988.

- Yano, J., Ambaum, M. H. P., Dacre, H., and Manzato, A.: A dynamical-system description of precipitation over the tropics and the midlatitudes, Tellus A: Dynamic Meteorology and Oceanography, 72, 1–17, 2020.